The 2D bubble spirit level is a simple tool that is easy to understand and use. It does not take long to learn to tilt the bubble in the opposite direction to move it inside the circle in the middle. Given how easy it is to use a visual spirit level, you might think that a sonic version could also be easy to understand and use.

But what should a spirit level sound like? How do sounds tell you to tilt it in different directions? Should it go quiet when it is level, or should it give a loud beep? Could you really use sound alone to level the bubble? Would one sound design be better than any other, or would they all work equally well? Does the addition of sounds to the visual interface make the task easier or harder?

To explore these questions I built three Sonic Tilt Apps with different sound designs and tried them out. The Apps were compared by timing how long it took to reach the level position over 10 trials in the Visual, then Sonic, then Visual+Sonic conditions in each App. Table 1 shows the mean time to level from a random starting point over 10 trials in each condition for each App, run one after the other in series.

| SpiritLevel App | Visual (s) | Sonic (s) | Visual+Sonic (s) |
| --- | --- | --- | --- |
| App 1. Tuning | 16.4 | 23.8 | 14.2 |
| App 2. Pobblebonk | 14.9 | 20.9 | 9.6 |
| App 3. Crickets | 6.4 | 22.1 | 7.6 |

Table 1. Mean time (seconds) to level over 10 trials from random starting position.

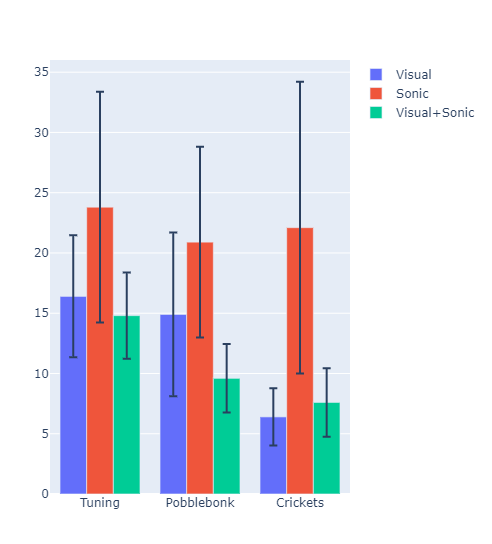

Figure 1. Mean time (seconds) to level, with Standard Deviation on error bars.

The data shows measurements for three different designs (Tuning, Pobblebonk, and Crickets) across three conditions (Visual, Sonic, and Visual+Sonic), along with their spread shown by Standard Deviation on the error bars. From the visualization, we can observe that:

- The Sonic condition shows the highest mean time in all three designs.
- The Visual+Sonic combination shows lower values than either condition alone in Tuning and Pobblebonk; in Crickets it is slightly higher than the Visual condition (7.6s against 6.4s).
- The Crickets design shows the most variation between conditions.
- Tuning and Pobblebonk show somewhat similar patterns.

The Sonic condition shows notably larger error bars across all designs, suggesting more variability in these measurements. The Visual+Sonic combination shows relatively smaller error bars, indicating more consistent measurements in this condition.

The Visual mean for Tuning and Pobblebonk is around 15s, but it drops to 6.4s for Crickets, where the trials are also more consistent. This improvement could be due to a learning curve for hand-eye coordination that begins to kick in after the first 60 trials. Alternatively, since the colour scheme for each App is different, the improvement might be because the high-contrast yellow and black in Crickets is more effective.

The Sonic mean is similar for all three Apps, at around 22s. The ability to complete the task in all 30 Sonic trials using sound alone supports the hypothesis that sound can provide the information needed to perform a 2D levelling task. However, there is no clear difference due to the different sound designs in each App, and no sign of a learning effect in the Sonic condition over the course of the 90-trial session. The spread in the Sonic condition is wide and increases in Crickets. Overall, Pobblebonk has the lowest mean and lowest spread, so it could be the most promising for further iteration.

The mean for the Visual+Sonic condition is lower than for the Visual condition in Tuning and Pobblebonk, and a bit higher in Crickets. This could be the sound providing additional information in the first two cases and distracting information in the third. The spread in the Visual+Sonic condition is similar across all three Apps, similar to the most consistent result for the Visual condition in Crickets, and about half of that for the Visual condition in Tuning and Pobblebonk. This suggests that the addition of the sounds results in more consistent performance than in the Visual-only condition.
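The comparisons above can be checked directly from the means in Table 1. The following is a minimal sketch: the values are copied from the table, while the names `table1`, `sound_effect`, `differences` and `sonic_mean` are only illustrative. It works from the published means alone, so it says nothing about the spread of the individual trials.

```python
# Mean times to level (seconds), copied from Table 1.
table1 = {
    "Tuning": {"Visual": 16.4, "Sonic": 23.8, "Visual+Sonic": 14.2},
    "Pobblebonk": {"Visual": 14.9, "Sonic": 20.9, "Visual+Sonic": 9.6},
    "Crickets": {"Visual": 6.4, "Sonic": 22.1, "Visual+Sonic": 7.6},
}


def sound_effect(means):
    """Change in mean time when sound is added to the visual display.

    A negative value means levelling was faster with sound added.
    """
    return round(means["Visual+Sonic"] - means["Visual"], 1)


# Difference between Visual+Sonic and Visual for each App.
differences = {app: sound_effect(means) for app, means in table1.items()}

# Average of the three Sonic means (the "around 22s" figure).
sonic_mean = sum(means["Sonic"] for means in table1.values()) / len(table1)

for app, diff in differences.items():
    direction = "faster" if diff < 0 else "slower"
    print(f"{app}: {abs(diff):.1f}s {direction} with sound added")
print(f"Sonic mean across Apps: {sonic_mean:.1f}s")
```

With the Table 1 values, sound added to the visual display saves 2.2s in Tuning and 5.3s in Pobblebonk, and costs 1.2s in Crickets.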

These results are personal and not intended to be general. The quick and dirty evaluation is a way to reflect on the hypotheses and assumptions underlying the many choices made in each design, as a basis for further iterative prototyping. Some questions raised by the results of this small pilot are:

1) Is the improvement in the Visual results for the third App due to learning or the colour scheme?

2) Is there a learning effect for the Sonic condition that takes longer than 10 trials?

3) If the Visual and Sonic conditions are trained, do the combined Visual+Sonic results also improve, or does that condition have to be trained as well?

4) Is the worse Visual+Sonic performance in the third App repeatable, and if so, is it the Crickets sound that is causing distraction, or is it the minimal Sonic information that is causing the deterioration in performance?

The observations from this further study could inform the design of more formal randomized trials that control for learning effects and provide results with statistical significance.
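One way such a trial could control for learning effects is to randomize the order of trials rather than running the Apps and conditions in a fixed series. The sketch below is an assumption about how that might be set up, not the procedure used in this pilot; the function name `randomized_schedule` and its parameters are hypothetical.

```python
import itertools
import random

APPS = ["Tuning", "Pobblebonk", "Crickets"]
CONDITIONS = ["Visual", "Sonic", "Visual+Sonic"]


def randomized_schedule(trials_per_cell=10, seed=None):
    """Return a shuffled list of (app, condition) trials.

    Every app/condition pair appears trials_per_cell times, but in random
    order, so no pair is systematically tested later (and therefore with
    more practice) than another.
    """
    rng = random.Random(seed)  # a fixed seed makes the schedule reproducible
    cells = list(itertools.product(APPS, CONDITIONS))
    schedule = cells * trials_per_cell
    rng.shuffle(schedule)
    return schedule


schedule = randomized_schedule(trials_per_cell=10, seed=1)
print(len(schedule))  # 90
print(schedule[:5])
```

Fully interleaving the trials means switching App on almost every trial, which may be impractical. A blocked alternative would keep the 10 trials of each pair together and shuffle only the order of the nine blocks, ideally with a different order for each participant.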